GPT-5: Here’s why product velocity now beats raw model gains

Also - is it any good?!

The release of GPT‑5 is a milestone, not because it vaults us into Artificial General Intelligence (AGI), but because it makes AI systems far more usable in the real world. OpenAI’s new flagship is a system, not a single monolithic LLM. It blends a fast chat model, a deeper “thinking” model, and a real‑time router that chooses between them - and then gets out of the way so users and developers don’t have to. That shift signals where value will come from over the next 12‑18 months: products that orchestrate multiple models, tools, and data sources into dependable workflows.

What GPT-5 Actually Changed

System of models, routed automatically. ChatGPT now auto-switches between GPT-5 Chat and GPT-5 Thinking. A router decides when to “think longer” based on task complexity, available tools, and explicit user intent. The key point is that users no longer need to pick a model to get strong answers.

Developer control surfaces. In the Application Programming Interface (API), GPT-5 adds a reasoning_effort control - including a new minimal mode - plus a verbosity parameter and plaintext “custom tools”. These are product-builder features that make systems cheaper, faster, and easier to steer.

Benchmarks that matter to shipping software. GPT-5 raises the state of the art on real-world engineering tasks - e.g. 74.9% on SWE-bench Verified and ~97% on τ²-bench tool use - and improves long-context retrieval and instruction following, which shows up as fewer stuck agents and more reliable multi-step execution.

Enterprise scale and safety. The model introduces safe completions to improve both helpfulness and safety for dual-use prompts - a pragmatic upgrade for regulated and high-stakes settings. Rollout prioritises business tiers and the API, reinforcing GPT-5 as a work tool first.

Practical limits and pricing. GPT-5-family models in ChatGPT and the API support large contexts and high output ceilings, with pricing that encourages broad usage while keeping a premium for heavy reasoning. In ChatGPT, a “mini” tier takes over when usage caps are hit - again prioritising seamless product behaviour over raw maximal capability.
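As a rough illustration of the reasoning_effort and verbosity controls mentioned above, here is a minimal Python sketch that validates the two settings before they are attached to a request. The class name, the helper method, and the flat field layout are assumptions made for illustration; the exact request shape depends on the endpoint and SDK version, so check the current API reference before relying on it.

```python
from dataclasses import dataclass

# Value sets as commonly documented for GPT-5; verify against the API reference.
REASONING_EFFORTS = ("minimal", "low", "medium", "high")
VERBOSITY_LEVELS = ("low", "medium", "high")


@dataclass(frozen=True)
class GenerationControls:
    """Hypothetical container for the two steering knobs named in the post."""
    reasoning_effort: str = "medium"
    verbosity: str = "medium"

    def __post_init__(self):
        # Fail fast on typos instead of sending a bad request.
        if self.reasoning_effort not in REASONING_EFFORTS:
            raise ValueError(f"unknown reasoning_effort: {self.reasoning_effort!r}")
        if self.verbosity not in VERBOSITY_LEVELS:
            raise ValueError(f"unknown verbosity: {self.verbosity!r}")

    def as_request_fields(self) -> dict:
        # Assumption: flat field names. Some endpoints nest these settings.
        return {
            "reasoning_effort": self.reasoning_effort,
            "verbosity": self.verbosity,
        }


# A cheap, terse preset for steps that do not need deep reasoning.
FAST_AND_TERSE = GenerationControls(reasoning_effort="minimal", verbosity="low")
```

Keeping the presets as named constants makes the cost and quality trade-off visible in code review rather than buried in call sites.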

Why this delights users - and disappoints AGI futurists

If you came for a singular, discontinuous leap toward AGI, GPT-5 will feel incremental. If you came to build or deploy products, it is a significant step. The router abstracts model choice. New control surfaces abstract cost-quality trade-offs. Tool-use reliability lifts completion rates for end-to-end tasks. The result is less cognitive overhead for users and less orchestration glue for teams shipping software.

This is the arc several thoughtful commentators anticipated - progress bending toward product, not just raw IQ. As Nathan Lambert put it, GPT-5 looks like the “traditional tech path” where performance, price, and product all matter, rather than a single capability jump that flips the AGI switch. In other words, it is a consolidation of strong capabilities into a more usable, cheaper package - a win for adoption, not a revelation for AGI timelines.

Meanwhile, usage at consumer and business scale continues to compound - OpenAI cites nearly 700 million weekly ChatGPT users and over 5 million paid business users across Team, Enterprise and Edu - evidence that usability upgrades are the real growth lever. GPT-5 rolls out first to teams and developers, with an extended-reasoning “Pro” option for the heaviest workloads.

The product thesis - abilities will develop more slowly than products

A modern AI product is a system of systems: foundation models, reasoners, retrievers, tools, graphs, caches, policies, and telemetry. The competitive edge comes from how these pieces are composed, evaluated, and maintained in production. GPT‑5 strengthens the composition layer. That is where revenue is being made today.

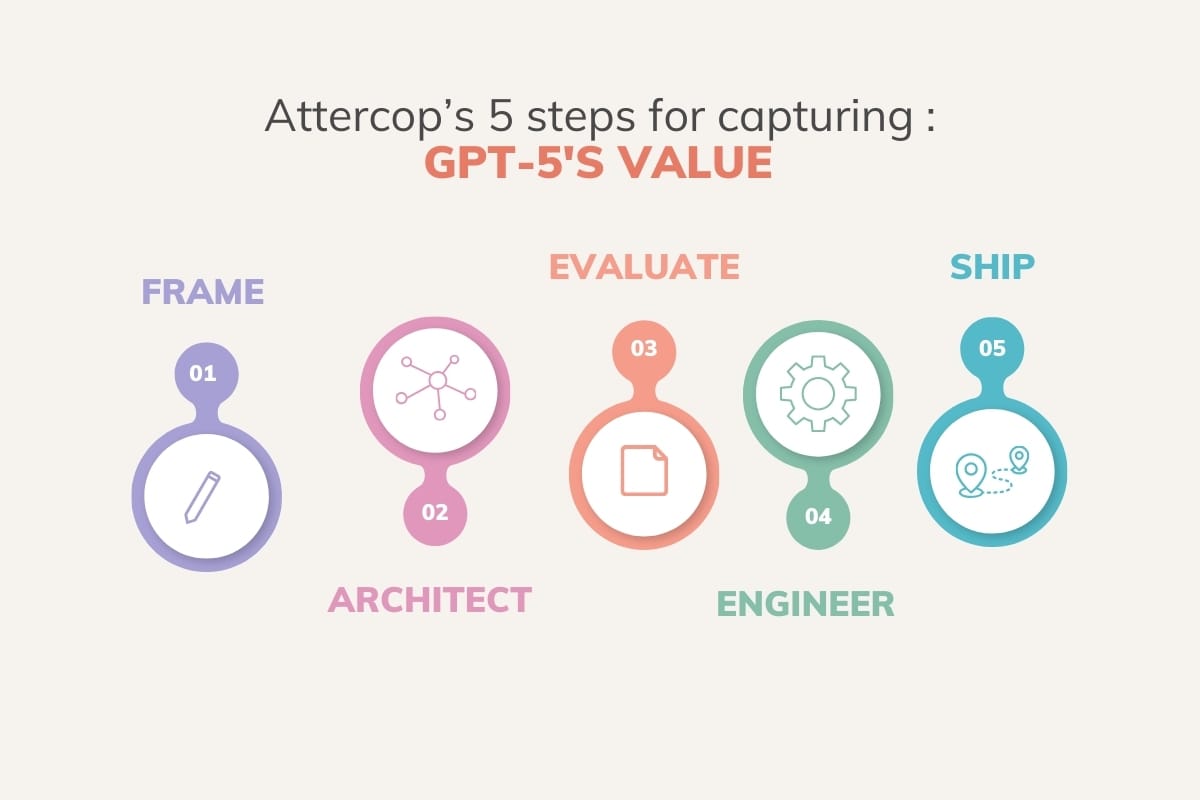

To make this concrete, here is how we at Attercop guide clients to capture GPT‑5’s value right now.

1) Frame problems as jobs, not prompts

Write job stories that capture context, constraints, and success criteria.

Map each job to capabilities: retrieval, enrichment, structured planning, tool calls, human review.

Decide where to spend “thinking” - use minimal reasoning where acceptable, reserve deeper reasoning for the few steps that truly need it.
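One way to make the job framing above tangible is to write each job down as data, then derive where "thinking" gets spent. The sketch below is only an illustration: the `Job` and `Step` classes, the example job, and the simple two-level effort rule are all hypothetical, not part of any library.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Step:
    name: str
    capability: str  # e.g. "retrieval", "structured_planning", "tool_call", "human_review"
    needs_deep_reasoning: bool = False


@dataclass
class Job:
    story: str                    # context and constraints in one sentence
    success_criteria: List[str]
    steps: List[Step] = field(default_factory=list)


def effort_plan(job: Job) -> Dict[str, str]:
    """Use minimal reasoning by default; reserve high effort for flagged steps."""
    return {
        step.name: "high" if step.needs_deep_reasoning else "minimal"
        for step in job.steps
    }


# Hypothetical example job.
dispute_job = Job(
    story="When a customer disputes an invoice, I want a drafted resolution "
          "so that finance can approve it within one working day.",
    success_criteria=["cites the contract clause", "approved without rework"],
    steps=[
        Step("fetch_invoice", "retrieval"),
        Step("compare_to_contract", "structured_planning", needs_deep_reasoning=True),
        Step("draft_reply", "tool_call"),
        Step("finance_signoff", "human_review"),
    ],
)

plan = effort_plan(dispute_job)
```

Here only `compare_to_contract` is routed to deeper reasoning; the other three steps stay on the cheapest setting.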

2) Architect for multi‑model, multi‑tool execution

Use the router in ChatGPT for end-user flows; in your stack, build your own routing for model families and tools - GPT-5’s control surfaces make that cheaper.

Treat tool use as a contracted interface with strict schemas, retries, and compensating actions.

Add knowledge layers where it pays off - RAG for breadth, GraphRAG or a knowledge graph where entity precision and provenance matter.
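The idea of treating tool use as a contracted interface can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pattern: `ToolContract`, `ContractViolation`, and the flat type-per-argument schema are hypothetical names and simplifications (a real system would more likely use JSON Schema or a validation library).

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional


class ContractViolation(Exception):
    """Raised when a tool call does not match the declared argument schema."""


@dataclass
class ToolContract:
    name: str
    required_args: Dict[str, type]          # strict schema: exact keys, expected types
    run: Callable[..., Any]
    compensate: Optional[Callable[..., None]] = None  # undo or cleanup on final failure
    max_attempts: int = 3
    backoff_seconds: float = 0.0

    def validate(self, args: Dict[str, Any]) -> None:
        missing = set(self.required_args) - set(args)
        unexpected = set(args) - set(self.required_args)
        if missing or unexpected:
            raise ContractViolation(
                f"{self.name}: missing={sorted(missing)} unexpected={sorted(unexpected)}"
            )
        for key, expected in self.required_args.items():
            if not isinstance(args[key], expected):
                raise ContractViolation(f"{self.name}: {key} must be {expected.__name__}")

    def call(self, **args: Any) -> Any:
        self.validate(args)  # reject malformed calls before any side effect
        last_error: Optional[Exception] = None
        for attempt in range(1, self.max_attempts + 1):
            try:
                return self.run(**args)
            except Exception as exc:  # sketch only: a real system would retry narrower errors
                last_error = exc
                if attempt < self.max_attempts:
                    time.sleep(self.backoff_seconds * attempt)
        if self.compensate is not None:
            self.compensate(**args)  # compensating action after retries are exhausted
        raise RuntimeError(f"{self.name} failed after {self.max_attempts} attempts") from last_error
```

Validation runs before the first attempt, so a malformed call from the model never reaches the underlying system, and the compensating action fires only once all retries are spent.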

3) Build an evaluation and observability spine

Pre-prod - task-aligned evals: SWE-bench variants for code agents, τ²-bench-style scenarios for tool chains, and instruction-following checks like COLLIE or MultiChallenge where UX depends on fidelity.

Prod - capture tool traces, failure modes, and cost, latency, and quality metrics by step. Target agentic success rate and time-to-correct-result as north-star KPIs.

Gate releases on deltas that matter to users - not just benchmark wins.
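The two north-star KPIs and the release gate described above could be computed from production traces roughly as follows. This is a sketch under assumptions: the `TaskTrace` fields, the function names, and the default thresholds (no loss in success rate, at most a 10% slowdown) are illustrative choices, not recommendations from any benchmark or vendor.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class TaskTrace:
    task_id: str
    succeeded: bool
    seconds_to_correct: Optional[float]  # None if no correct result was reached
    cost_usd: float


def agentic_success_rate(traces: List[TaskTrace]) -> float:
    if not traces:
        raise ValueError("need at least one trace")
    return sum(t.succeeded for t in traces) / len(traces)


def median_time_to_correct(traces: List[TaskTrace]) -> Optional[float]:
    times = [
        t.seconds_to_correct
        for t in traces
        if t.succeeded and t.seconds_to_correct is not None
    ]
    return median(times) if times else None


def passes_release_gate(
    baseline: List[TaskTrace],
    candidate: List[TaskTrace],
    min_success_gain: float = 0.0,   # assumed threshold: must not lose success rate
    max_slowdown: float = 1.10,      # assumed threshold: at most 10% slower
) -> bool:
    gain = agentic_success_rate(candidate) - agentic_success_rate(baseline)
    if gain < min_success_gain:
        return False
    base_time = median_time_to_correct(baseline)
    cand_time = median_time_to_correct(candidate)
    if base_time is None:
        return True   # baseline never succeeded, so there is no timing to compare
    if cand_time is None:
        return False  # candidate never succeeded where the baseline did
    return cand_time <= base_time * max_slowdown
```

Running the gate on the same task set for both versions keeps the comparison about user-visible deltas rather than headline benchmark scores.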

4) Engineer for reliability and safety by design

Prefer structured outputs and grammar-constrained tools for determinism.

Adopt safe completions principles in your own policy layer - offer compliant alternatives rather than dead-end refusals.

Codify “deviation budgets” for reasoning tokens and tool calls to keep bills predictable.
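One possible reading of a "deviation budget" is a hard per-task cap on reasoning tokens and tool calls that the orchestrator checks before each step. The sketch below follows that interpretation; the class and method names are hypothetical, and a real deployment might prefer soft limits with alerts over hard failures.

```python
from dataclasses import dataclass
from typing import Dict


class BudgetExceeded(Exception):
    """Raised when a step would push the task past its budget."""


@dataclass
class DeviationBudget:
    max_reasoning_tokens: int
    max_tool_calls: int
    reasoning_tokens_used: int = 0
    tool_calls_used: int = 0

    def charge(self, reasoning_tokens: int = 0, tool_calls: int = 0) -> None:
        new_tokens = self.reasoning_tokens_used + reasoning_tokens
        new_calls = self.tool_calls_used + tool_calls
        # Check before committing so a rejected charge leaves the budget unchanged.
        if new_tokens > self.max_reasoning_tokens or new_calls > self.max_tool_calls:
            raise BudgetExceeded(
                f"would use {new_tokens}/{self.max_reasoning_tokens} reasoning tokens "
                f"and {new_calls}/{self.max_tool_calls} tool calls"
            )
        self.reasoning_tokens_used = new_tokens
        self.tool_calls_used = new_calls

    def remaining(self) -> Dict[str, int]:
        return {
            "reasoning_tokens": self.max_reasoning_tokens - self.reasoning_tokens_used,
            "tool_calls": self.max_tool_calls - self.tool_calls_used,
        }
```

A caller would create one budget per task, charge it after (or before) each model or tool step, and fall back to a cheaper path or a human handoff when `BudgetExceeded` is raised.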

5) Ship the surrounding product

Memory, connectors, and collaboration UX are not nice-to-haves - they unlock adoption. GPT-5 brings these natively in ChatGPT, and you should mirror them in your enterprise apps.

What to watch next

Router quality and transparency - how well auto-routing aligns to business SLAs and how much control enterprises get over it.

Cost-aware reasoning - developers will aggressively tune reasoning_effort and verbosity to hit latency and margin targets. Expect patterns to emerge.

Agentic reliability - fewer flaky tool chains and more successful long-running tasks are what matter most for enterprise value.

Safety that helps rather than blocks - safe completions is directionally right for dual-use domains where a flat refusal destroys UX.

Bottom line

GPT-5 cements the idea that capability is necessary but insufficient. The growth - and defensibility - now lives in products that compose multiple models and tools into dependable, observable systems. For consumers, that feels like “it just works”. For AGI futurists, it feels like a slower arc. For operators and builders, it is exactly the arc we need: steady, usable progress that compounds.

Curious about what Attercop is building with AI beyond the headlines?

Check us out at attercop.com or join the conversation on LinkedIn.

And if this piece made you think, subscribe to our newsletter for the next one.